Molecular evolution: improve a protein by weakening it

In the cartoon version of evolution that is often employed by critics of the theory, a new protein (B) can arise from an ancestral version (A) by stepwise evolution only if each of the intermediates between A and B is functional in some way (or at least not harmful). This sounds reasonable enough, and it’s a good starting point for basic evolutionary reasoning.

But that simple version can lead one to believe that only those mutations that help a protein, or leave it mostly the same, can be proposed as intermediates in some postulated evolutionary trajectory. There are several reasons why that is a misleading simplification - there are in fact many ways in which a mutant gene or protein that seems to be partially disabled might nevertheless persist in a population or lineage. Here are two possibilities:

- The partially disabled protein might be beneficial precisely because it’s partially disabled. In other words, sometimes it can be valuable to turn down a protein’s function.

- The effects of the disabling mutations might be masked, partially or completely, by other mutations in the protein or its functional partners. In other words, some mutations can be crippling in one setting but not in another.

In work just published by Joe Thornton’s lab at the University of Oregon, reconstruction of the likely evolutionary trajectory of a protein family (i.e., the steps that were probably followed during an evolutionary change) points to both of those explanations, and illustrates the increasing power of experimental analyses in molecular evolution.

The main focus of the Thornton lab is the reconstruction of the evolutionary pathways that gave us various families of steroid receptors. By combining phylogenetic inference (i.e., examining molecular “pedigrees” to infer the nature of an ancestral protein) and hard-core biochemistry and biophysics, Thornton and his colleagues can identify the likely ancestral proteins that gave rise to the modern receptors, then “resurrect” them in the lab and study their properties.

The reconstructions of receptor pedigrees have led to the following basic history of the receptors.

- An ancestral receptor, present about 450 million years ago in a vertebrate, responded to two different kinds of steroid hormone at high sensitivity. This means that the receptor wasn’t very selective, but it was sensitive, so it didn’t take a lot of hormone to get a response.

- The gene encoding that receptor was duplicated at some point, and the two resulting genes diverged to become specialized. One receptor (we’ll just call it the MR) retained many of the ancestral features: low selectivity, high sensitivity. The other receptor (the glucocorticoid receptor, which we’ll call the GR) became selective for one type of hormone (glucocorticoids, the kind of steroid that includes cortisol) but also became a lot less sensitive. And that is the current situation in all vertebrates, as far as we know.

Previous studies in Thornton’s lab outlined a likely trajectory through which the different receptors changed specificity. But the loss of sensitivity remained unexplained. In the June 2011 issue of PLoS Genetics, Sean Michael Carroll and his colleagues in the Thornton lab tackle this question, uncovering an interesting type of genetic interaction that is a hot topic in evolutionary biology right now. Their paper is titled “Mechanisms for the Evolution of a Derived Function in the Ancestral Glucocorticoid Receptor.”

The authors already knew that all known GRs have low sensitivity. That includes GRs from the main types of vertebrates: tetrapods like us, bony fish like salmon and piranhas, and non-bony fish (called cartilaginous fish) like sharks and rays. They suspected that this meant that hormone sensitivity was first reduced in the common ancestor of all of those animals. To test this hypothesis, they first needed to examine GRs from animals throughout the vertebrate family tree, so they could infer the nature of the GR in the common ancestor. You might want to take a few minutes to examine the simple family tree of the receptors and of the various animals, presented in Figure 1 and reproduced below.

So the authors obtained GR gene sequences from four different species of shark (representatives of the cartilaginous fishes), then created the proteins in the lab and asked whether low sensitivity was a universal feature of cartilaginous fishes. Figure 2 illustrates the simple answer: yes, it is.

Each of the points on the graph represents the amount of hormone necessary to activate the receptor. The different colors represent different kinds of hormone, and we can focus on just one, let’s say the dark blue. The different animals are indicated across the bottom; on the left are ancestral receptors, inferred from looking at the family tree, and on the right are each of five cartilaginous fishes: a skate (previously known) and the four new contestants (the sharks). Notice that the amount of hormone needed to activate the receptor goes up with each step in the left-hand box, and is even higher in each animal in the right-hand box. It takes more hormone to activate the receptor - it became less sensitive, and seems to have acquired this characteristic a long time ago.

How long ago, and how did the authors infer that? Well, they created a postulated reconstruction of the ancestral receptor - the receptor in the common ancestor - by combining knowledge of rates of change in proteins with the new knowledge gained by looking at four new animals (the sharks). The graph in Figure 2 shows the hormone sensitivity of this new reconstruction (it’s version 1.1) compared to an older reconstruction based on just one cartilaginous fish (that was version 1.0). The new “resurrected” ancestral receptor (AncGR1.1) has much lower sensitivity than its much more ancient ancestor (AncCR), the one with high sensitivity but no selectivity. So it seems that the change in sensitivity happened after the duplication event but before any of the various kinds of vertebrates diverged, something less than 450 million years ago.

But how did the change come about? Let’s look back at the family tree in Figure 1 to get oriented. The grandparent of all the receptors is called AncCR and it was sensitive but not selective. After the duplication, there were two parents, if you will: the MR parent which we’re not discussing, and the GR parent, called the AncGR. AncGR, the parent of all the GRs, had reduced sensitivity. Carroll and colleagues addressed the how question by first looking at the types of changes that occurred between the grandparent and the parent.

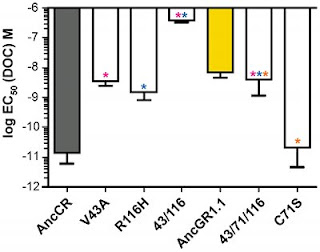

There were 36 changes that accrued during that time. The authors used some straightforward reasoning to narrow the list of suspects down to six. In other words, six different changes in the protein, together or separately, were likely to account for the change in sensitivity. They went into the lab and resurrected each of those mutant proteins, and measured their sensitivity. And their data tell a very interesting story, presented as a graph in Figure 3.

The gray bar is the grandparent. The yellow bar is the parent. (The scale is logarithmic, so the change in sensitivity from grandparent to parent is at least 100-fold.) The other bars represent the sensitivity of some of the mutations that must have generated the low-sensitivity parent. So, the first white bar is mutant 43. (The number represents a particular location of the mutation, but we’re not interested in that here.) That mutation drops sensitivity to near-parental levels. The next bar is mutant 116. It also reduces sensitivity to the parental level. Sounds good. But wait: both of those mutations are present in the parent. What happens when you put them together? Disaster. Look at the next white bar: it’s the combination of 43 and 116, and the receptor is effectively dead. As the authors put it in the abstract, “the degenerative effect of these two mutations is extremely strong.”

Think about what this means. There are two mutations in this transition that account for the loss of sensitivity to the hormone. Both are present in the final product (the parent receptor) but when they are introduced together, they drastically disable the protein. How, then, could this protein have come about?

Well, there were six mutations in the candidate pool. One of them creates mutant 71, the last white bar on the far right. By itself, mutant 71 doesn’t affect sensitivity. But when mutant 71 is present with the deadly 43/116 combination (see the next-to-last bar), the result is a receptor with the parent’s characteristics. Lower sensitivity, but not complete loss of function.

Carroll et al. dissected the biophysics of these resurrected receptors, and provided a clear explanation for the influence of each mutation in terms of effects on stability of the hormone-receptor complex. Here’s how they summarize the results:

“We found that the shift to reduced GR sensitivity was driven by two large-effect mutations that destabilized the receptor-hormone complex. The combined effect of these mutations is so strong that a third mutation, apparently neutral in the ancestral background, evolved to buffer their degenerative effects.”

That third mutation almost certainly occurred first. Recall that it has no apparent effect on function by itself. Carroll et al. refer to its effect as “buffering” because its presence reverses the destabilization caused by the other two mutations.
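The ordering argument can be sketched in a few lines of Python. This is only an illustration of the logic, not the authors’ analysis: the phenotype labels are the qualitative descriptions given above, the function names are made up for the example, and the double mutants that aren’t described in this post (43 with 71, 116 with 71) are simply marked “unknown” as an assumption.

```python
from itertools import permutations

# Qualitative phenotypes as described in the text above, keyed by the set of
# mutations present on the AncCR (grandparent) background. These are labels,
# not measurements.
DESCRIBED = {
    frozenset(): "sensitive",
    frozenset({43}): "reduced",
    frozenset({116}): "reduced",
    frozenset({71}): "sensitive",
    frozenset({43, 116}): "dead",
    frozenset({43, 71, 116}): "reduced",
}


def trace(order):
    """Return the phenotype after each step when mutations arise in `order`."""
    genotype = frozenset()
    steps = []
    for site in order:
        genotype = genotype | {site}
        # Genotypes not described in the post are labeled "unknown" (assumption).
        steps.append(DESCRIBED.get(genotype, "unknown"))
    return steps


def avoids_dead_receptor(order):
    """True if no intermediate along this order is the dead 43/116 receptor."""
    return "dead" not in trace(order)


# Walk through every possible order of the three mutations.
for order in permutations((43, 71, 116)):
    status = "ok" if avoids_dead_receptor(order) else "blocked"
    print(order, trace(order), status)
```

Under these assumptions, the only orders that run into the dead receptor are the two in which 71 arrives last. The sketch can’t say whether 71 came first or second, because that depends on the double mutants not described here.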

Here are some closing comments on the paper.

- It’s nice to keep in mind that we can design experiments to test hypotheses concerning evolutionary trajectories. We can move beyond simplistic and distorted models (if we want to understand evolution, that is).

- The reduction in function of a protein is not necessarily a “degenerative” change. In the case of the GR protein, lower sensitivity to hormone is likely to create the opportunity for different responses to different levels of steroids.

- Mutations that are damaging - even devastating - in some circumstances can be harmless or even beneficial in others. On reflection, that should be obvious, but it’s a simple fact that seems easy to overlook.

Several recent papers have converged on this basic point: interactions between different proteins, and between different mutations in one protein, exert significant influences on the nature and direction of evolutionary change. The key word here is ‘epistasis,’ and it’s a topic we should return to.
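For readers who like to see the idea as arithmetic: one common way to put a number on epistasis is to ask how far a double mutant departs from the sum of the two single-mutant effects, measured on a log scale. Here is a minimal sketch of that calculation. It assumes sensitivity is summarized as an EC50 (the hormone concentration giving half-maximal activation), and the numbers are invented for illustration; they are not values from the paper.

```python
import math


def log_fold_change(ec50_mutant, ec50_reference):
    """Change in log10(EC50); positive means more hormone is needed."""
    return math.log10(ec50_mutant / ec50_reference)


def pairwise_epistasis(ref, single_a, single_b, double):
    """Observed double-mutant effect minus the additive expectation (log10 units)."""
    expected = log_fold_change(single_a, ref) + log_fold_change(single_b, ref)
    observed = log_fold_change(double, ref)
    return observed - expected


# Hypothetical EC50 values (arbitrary units), chosen only to show the calculation.
ref, mut_a, mut_b, mut_ab = 1.0, 10.0, 10.0, 10000.0

epsilon = pairwise_epistasis(ref, mut_a, mut_b, mut_ab)
print(f"epistasis = {epsilon:.1f} log10 units")
```

A value of zero would mean the two mutations simply add up on the log scale; a value far from zero means each mutation’s effect depends on whether the other is present.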

[Note: the post first appeared Monday at Quintessence of Dust. Joe has seen it, and I asked him for comments and/or critique, to be posted here by him or me.]

Carroll SM, Ortlund EA, and Thornton JW (2011). Mechanisms for the Evolution of a Derived Function in the Ancestral Glucocorticoid Receptor. PLoS Genetics 7(6). doi:10.1371/journal.pgen.1002117